Digital experience monitoring (DEM) is an emerging discipline that goes beyond application performance monitoring (APM) and end-user experience monitoring (EUEM). EUEM looks specifically at how a human end user or customer interacts with an application. APM focuses on the performance and availability of the application itself. DEM, as Gartner defines it, covers the experience of all digital agents, human and machine, as they interact with an enterprise’s application and service portfolio.

We have evolved from the days when performance was synonymous with page load time. Now we look at how the user perceives the page as it loads. Users don’t care about what they can’t see; they only care about what they can see and interact with on the page.

In this article we discuss digital experience monitoring and its importance in modern IT environments.

Digital experience monitoring

Monitoring has greatly evolved as a practice over the last few decades, as advancements in digital technology have changed the way that the world interacts with our websites, services and applications.

According to Catchpoint, things have drastically changed during the last decade with the growth and adoption of the following technologies:

- Cloud Computing

- Edge Computing (DNS, CDN, Load Balancing, SD-WAN, WAF, etc.)

- Client-Side and Server-Side rendering

- APIs that enable deep integrations with third-party services

- Continuous Integration, Delivery, and Deployment

All these advancements have happened with the end user as the focal point.

Digital experience monitoring (DEM) is a newly defined area within the world of application performance monitoring (APM). Gartner has broken APM into three “functional dimensions” for coverage in its Magic Quadrant market assessment: DEM; application discovery, tracing and diagnostics (ADTD); and application analytics (AA).

Gartner defines DEM as “an availability and performance monitoring discipline that supports the optimization of the operational experience and behavior of a digital agent, human or machine, as it interacts with enterprise applications and services. For the purposes of this evaluation, it includes real-user monitoring (RUM) and synthetic transaction monitoring (STM) for both web- and mobile-based end users.”

As a testament to the growing significance of the DEM market, Gartner will continue to include DEM in its evaluations of APM vendors but also break out DEM to keep track of its own market metrics.

Digital experience monitoring (DEM) software is used to discover, track, and optimize web-based resources and the end-user experience. These tools monitor traffic, user behavior, and a number of additional factors to help businesses understand their products’ performance and usability. DEM products combine active (simulated) traffic monitoring with real-user monitoring, so they can analyze both theoretical performance and the experience real users actually get. They provide analytics for examining and improving application and site performance, and they help businesses understand how visitors navigate their site and where the end-user experience suffers.

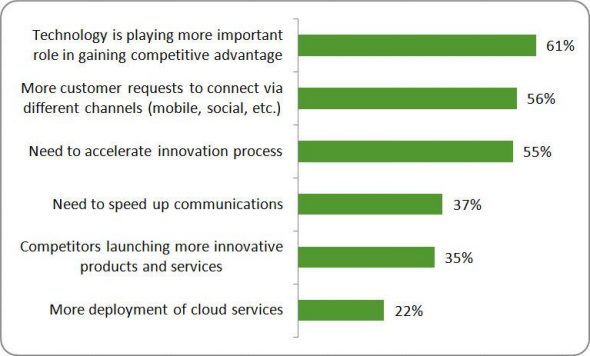

Failing to keep up with the demands of digital consumers will leave companies behind, and today the majority of consumers are digital. With a DEM strategy you can find valuable insights in application performance data and user behavior. Survey results from Digital Enterprise Journal’s recent Digital Transformation Benchmark show that competition is increasingly important to organizations: half of the survey statements have to do with competition.

The takeaway is that all enterprises undergoing digital transformation will need to put a solid digital experience monitoring plan in place to ensure an optimal customer experience at every contact point. Embracing DevOps means prioritizing the experience for the end user and not just internal build processes. Those who do not focus on maintaining an optimal user experience will watch as their customer bases churn away.

With Agile development, releases happen at a much higher cadence, requiring companies to continuously test and optimize their applications to stay in business. Even with testing, code releases can cause unexpected performance degradation. Being notified about a degradation as soon as possible leads to a faster resolution, or a rollback if needed. It isn’t possible to test every permutation of browser, device, and network provider, which is why companies are including real user monitoring as part of their DEM strategy.
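
To make the idea concrete, here is a minimal Python sketch of how real user monitoring data could be used to spot a regression after a release. It is an illustration only, not the method of any particular DEM product: the sample fields (`browser`, `device`, `network`, `load_ms`), the function names, and the 20% tolerance are all assumptions.

```python
from collections import defaultdict
from statistics import median


def median_by_segment(samples):
    """Group RUM samples by (browser, device, network) and take the median load time."""
    groups = defaultdict(list)
    for sample in samples:
        key = (sample["browser"], sample["device"], sample["network"])
        groups[key].append(sample["load_ms"])
    return {key: median(values) for key, values in groups.items()}


def regressed_segments(before, after, max_ratio=1.2):
    """Return segments whose median load time grew by more than max_ratio after a release."""
    baseline = median_by_segment(before)
    current = median_by_segment(after)
    regressions = {}
    for key, now_ms in current.items():
        was_ms = baseline.get(key)
        # Segments with no pre-release data are skipped: there is nothing to compare against.
        if was_ms is not None and now_ms > was_ms * max_ratio:
            regressions[key] = (was_ms, now_ms)
    return regressions
```

Because the comparison is made per segment, a release that only slows down one combination, say a mobile browser on a cellular network, is not hidden by healthy numbers from everyone else.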

Digital experience monitoring key elements

There are four major pillars of monitoring:

- Reachability

- Availability

- Performance

- Reliability

Reachability

Reachability means, “Are we able to reach point B from point A?” In the monitoring context it means, “Are the end users able to access the application? If not, what is preventing the end users from accessing the application?”

In the traditional IT world, monitoring reachability was simple. Since everything was hosted under one roof, enterprises just had to worry about end users being able to reach their datacenters.
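
A reachability probe can be sketched with nothing more than Python’s standard `socket` module. The sketch below is an assumption about how such a check might look, not a description of a specific monitoring tool: it first resolves the host name, then attempts a TCP connection, and reports which stage failed. The function name and the result fields are hypothetical.

```python
import socket


def check_reachability(host, port=443, timeout=3.0):
    """Try DNS resolution, then a TCP connection, and report which stage failed."""
    result = {"host": host, "port": port, "reachable": False,
              "failed_stage": None, "error": None}
    try:
        # Stage 1: DNS. Can the name be resolved at all?
        addresses = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror as exc:
        result["failed_stage"] = "dns"
        result["error"] = str(exc)
        return result

    # Stage 2: TCP. Can a connection be opened to any resolved address?
    last_error = None
    for family, socktype, proto, _canonname, sockaddr in addresses:
        try:
            with socket.socket(family, socktype, proto) as sock:
                sock.settimeout(timeout)
                sock.connect(sockaddr)
            result["reachable"] = True
            return result
        except OSError as exc:
            last_error = exc

    result["failed_stage"] = "tcp"
    result["error"] = str(last_error)
    return result
```

Knowing the failed stage answers the second question above: a `dns` failure points at name resolution, while a `tcp` failure points at the network path or the listening service.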

Availability

In the monitoring context, availability is all about, “Is the end user able to use the application once they reach it?” The reachability problems discussed above are often treated as availability issues. Yet while there is certainly overlap between the two (i.e. availability can be impacted by reachability issues), there can be scenarios where end users are able to reach the endpoint, but the service is down.

Enterprises have traditionally focused on ensuring that their applications are up and responding to requests. If it’s a web application, then most monitoring tools look for HTTP 200 status with some form of basic validation.
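
As a rough illustration of “HTTP 200 status with some form of basic validation”, the Python sketch below fetches a URL with the standard library and checks both the status code and the presence of an expected piece of text. The function names and the choice of a text match as the validation step are assumptions made for the example.

```python
from urllib import request


def evaluate_response(status, body, expected_text):
    """Available only if the status is 200 AND the body contains the expected text."""
    return status == 200 and expected_text in body


def check_availability(url, expected_text, timeout=5.0):
    """Fetch the URL and apply the basic validation; any network or HTTP error counts as down."""
    try:
        with request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return evaluate_response(resp.status, body, expected_text)
    except OSError:
        # urllib's URLError and HTTPError (raised for 4xx/5xx) are OSError subclasses,
        # as are socket timeouts and refused connections.
        return False
```

The content check matters because a maintenance page or an empty error template can still be served with a 200 status.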

Performance

Monitoring performance is important because a slow application damages the brand: end users don’t like slow applications. A slow application can be even more frustrating than one that is unavailable. Today, bad performance is the new downtime.

Performance monitoring has two key components:

- Establishing a baseline and alerting on any breaches

- Continuous improvement
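
The first component can be sketched in a few lines of Python. This is a deliberately simple illustration under stated assumptions: the baseline is taken as the median of historical response times, and a breach is any new sample more than 50% above it. Real tools may use percentiles, seasonality, or other models; the function names and the tolerance value are hypothetical.

```python
from statistics import median


def build_baseline(history_ms):
    """Baseline response time: the median of historical samples (in milliseconds)."""
    return median(history_ms)


def find_breaches(samples_ms, baseline_ms, tolerance=1.5):
    """Return (index, value) for every sample above baseline * tolerance."""
    threshold = baseline_ms * tolerance
    return [(i, value) for i, value in enumerate(samples_ms) if value > threshold]


history = [800, 820, 790, 810, 805]
baseline = build_baseline(history)                      # 805
breaches = find_breaches([810, 1300, 790, 2000], baseline)
print(breaches)                                         # [(1, 1300), (3, 2000)]
```

Continuous improvement then amounts to rebuilding the baseline as the application gets faster, so that the alert threshold tightens over time.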

Reliability

Reliability means delivering consistent application performance, availability and reachability. This is the trickiest part of the monitoring methodology because you will need a monitoring tool that does the job of a data scientist. Data scientists in the performance monitoring world have the difficult job of:

- Understanding and defining business problems

- Data Acquisition: determining how the right data can be collected to help solve business problems

- Data Preparation: cleaning data, sorting, transforming, and modifying based on rules

- Exploratory Data Analysis: defining and refining the selection of different variables

- Data Visualization: powerful reports and dashboards

- Historical Data Storage: compare performance over long periods of time
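
A small Python sketch can show what the preparation and analysis steps might look like for a batch of monitoring checks. It is only an assumption about one reasonable approach: records are sorted by time, the success rate is computed over all checks, and timing consistency is measured as the coefficient of variation (standard deviation divided by the mean) of successful checks. The record fields (`timestamp`, `ok`, `response_ms`) and the function name are hypothetical.

```python
from statistics import mean, pstdev


def consistency_report(records):
    """Summarize how consistently a service responded over one period."""
    if not records:
        raise ValueError("no records to analyze")

    # Data preparation: order by time, keep only successful checks with a valid timing.
    ordered = sorted(records, key=lambda r: r["timestamp"])
    timings = [
        r["response_ms"] for r in ordered
        if r["ok"] and r.get("response_ms") is not None and r["response_ms"] > 0
    ]

    report = {
        "period": (ordered[0]["timestamp"], ordered[-1]["timestamp"]),
        "checks": len(ordered),
        # Failed checks count against the success rate even if they have no timing.
        "success_rate": sum(1 for r in ordered if r["ok"]) / len(ordered),
        "mean_ms": None,
        "variation": None,
    }
    if timings:
        avg = mean(timings)
        report["mean_ms"] = avg
        # Lower variation means more consistent response times.
        report["variation"] = pstdev(timings) / avg
    return report
```

Running the same report over stored data from different periods (for example, this month against the same month last year) is one way to compare performance over long stretches of time.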

Conclusion

Monitoring has greatly evolved as a practice over the last few decades, as advancements in digital technology have changed the way that the world interacts with our websites, services and applications. Digital experience monitoring (DEM) software is used to discover, track, and optimize web-based resources and the end-user experience.

If you have any questions about how DEM can help you optimize your costs and performance, contact us today to discuss your performance and security needs.