In the last few years, the term “bot” has become widely used. Everyone is talking about bots: in politics, on social media, and when discussing website traffic.

Bad bots are an all-too-common and growing problem. But what specific damage are they doing on your website? And how does it impact your business?

What is a Bad Bot?

Bad bots scrape data from sites without permission in order to reuse it (e.g., pricing, inventory levels) and gain a competitive edge. The truly nefarious ones undertake criminal activities, such as fraud and outright theft.

The Open Web Application Security Project (OWASP) provides a list of the different bad bot types in its Automated Threat Handbook.

Left unaddressed, bad bots cause very real business problems that could harm the success — or even the continuance — of your organization. Examining the problems doesn’t require deep knowledge of the technology behind attacks or the techniques used to prevent them. Instead it requires a solid understanding of your business.

Here are some quick facts about bad bots:

- Every business with an online presence is regularly bombarded by bad bots on its website, APIs, or mobile apps.

- Unchecked bad bots cost businesses money every day. Unlike data breaches, which are relatively rare, automation abuse happens 24/7, 365 days a year, because bad bots never sleep.

- Bad bots are on your website for a purpose. Understanding what that purpose is helps you address the problem.

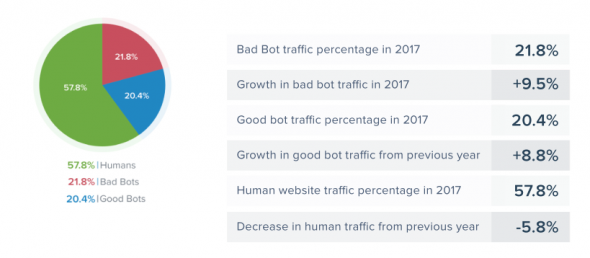

In 2017, 42.2% of all internet traffic wasn’t human, and there were significant year-over-year increases in both bad bot (+9.5%) and good bot (+8.8%) traffic.

Bad Bot sophistication levels

GlobalDots created the following industry-standard system, which classifies bad bots into four sophistication levels:

- Simple — Connecting from a single, ISP-assigned IP address, this type connects to sites using automated scripts, not browsers, and doesn’t self-report (masquerade) as being a browser.

- Moderate — Being more complex, this type uses “headless browser” software that simulates browser technology—including the ability to execute JavaScript.

- Sophisticated — Producing mouse movements and clicks that fool even sophisticated detection methods, these bad bots mimic human behavior and are the most evasive. They use browser automation software, or malware installed within real browsers, to connect to sites.

- Advanced Persistent Bots (APBs) — APBs combine moderate and sophisticated technologies and methods to evade detection while maintaining persistency on targeted sites. They tend to cycle through random IP addresses, enter through anonymous proxies and peer-to-peer networks, and are able to change their user agents.

You can read more about Bad Bots sophistication levels, their impact on various industries and a lot more in our Bad Bot Report 2018.

How Bad Bots hurt your website

Bots are tailored to target very specific elements of a website, but their impact goes beyond stolen content, spammed forms, and compromised account logins. OWASP’s Automated Threat Handbook for Web Applications profiles the Top 20 automated threats and groups each one into one of four categories:

- Account Credentials – Includes account aggregation, account creation, credential cracking, and credential stuffing.

- Payment Cardholder Data – Includes carding, card cracking, and cashing out.

- Vulnerability Identification – Includes footprinting, vulnerability scanning, and fingerprinting.

- Other – The catch-all category. Includes ad fraud, CAPTCHA bypass, denial of service, expediting, scalping, scraping, skewing, sniping, spamming, and token cracking.

How to protect your website from bad bots

Every website is targeted for different reasons, so there’s no one-size-fits-all solution to the bot problem. There are, however, certain steps you can take to make sure you’re protected from bad bots.

On the surface, visits from a human and from a bot may appear nearly identical. Bots can present themselves as normal users, with an IP address, browser and header data, and other seemingly identifying information. But dig a bit deeper by collecting and reviewing in-depth analytics and other request data, and you’ll be able to find the holes in the bots’ disguises.
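As a rough illustration of reviewing request data, the sketch below flags IP addresses that send an unusually high number of requests in a short window or that announce a script-like user agent. The `Request` record, the thresholds, and the list of user-agent hints are all hypothetical; real detection relies on many more signals, and sophisticated bots will pass both checks.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """One parsed access-log entry (hypothetical shape)."""
    ip: str
    timestamp: float  # seconds since the epoch
    user_agent: str


# Assumed thresholds; tune them against your own traffic.
MAX_REQUESTS_PER_WINDOW = 20
WINDOW_SECONDS = 10.0
# Assumed substrings that suggest a script rather than a browser.
SCRIPT_AGENT_HINTS = ("curl", "python-requests", "wget", "scrapy")


def suspicious_ips(requests: list[Request]) -> dict[str, set[str]]:
    """Return a mapping of IP address -> reasons it looks automated."""
    reasons: dict[str, set[str]] = defaultdict(set)
    stamps_by_ip: dict[str, list[float]] = defaultdict(list)

    for req in requests:
        stamps_by_ip[req.ip].append(req.timestamp)
        agent = req.user_agent.lower()
        if not agent or any(hint in agent for hint in SCRIPT_AGENT_HINTS):
            reasons[req.ip].add("non-browser user agent")

    for ip, stamps in stamps_by_ip.items():
        stamps.sort()
        start = 0
        for end, stamp in enumerate(stamps):
            # Advance the window start until it spans at most WINDOW_SECONDS.
            while stamp - stamps[start] > WINDOW_SECONDS:
                start += 1
            if end - start + 1 > MAX_REQUESTS_PER_WINDOW:
                reasons[ip].add("high request rate")
                break

    return dict(reasons)
```

A flagged address is only a candidate for closer inspection, not proof of a bot: shared office networks and proxies can legitimately produce bursts of traffic from a single IP.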

Now that you’ve separated human traffic from bot traffic, you can dig a bit deeper to see which bots are good and which are bad. Good bots include search engine crawlers (Google, Bingbot, Yahoo Slurp, Baidu, and more) and social media crawlers (Facebook, LinkedIn, Twitter, and Google+). Generally, you want to give these good bots access to your site, since they help humans find and reach it. Bad bots include any bots that are engineered for malicious use. These bots attempt scraping, brute force attacks, competitive data mining that causes brownouts, account hijacking, and more.
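Because any client can claim to be a search engine crawler in its user agent, one common way to confirm a good bot is a reverse DNS lookup followed by a forward lookup. The sketch below assumes the hostname suffixes in `CRAWLER_DOMAINS`, which should be checked against each crawler operator’s current documentation; the function and table names are illustrative, and the default resolvers cover IPv4 only.

```python
import socket
from typing import Callable

# Assumed hostname suffixes; verify against each operator's documentation.
CRAWLER_DOMAINS = {
    "googlebot": (".googlebot.com", ".google.com"),
    "bingbot": (".search.msn.com",),
}


def _reverse_dns(ip: str) -> str:
    """Look up the primary hostname registered for an IP address."""
    return socket.gethostbyaddr(ip)[0]


def _forward_dns(hostname: str) -> list[str]:
    """Look up the IPv4 addresses a hostname resolves to."""
    return socket.gethostbyname_ex(hostname)[2]


def is_verified_crawler(
    ip: str,
    user_agent: str,
    reverse: Callable[[str], str] = _reverse_dns,
    forward: Callable[[str], list[str]] = _forward_dns,
) -> bool:
    """Return True only if the claimed crawler identity survives both lookups."""
    agent = user_agent.lower()
    for name, suffixes in CRAWLER_DOMAINS.items():
        if name not in agent:
            continue
        try:
            hostname = reverse(ip).lower().rstrip(".")
            # The hostname must belong to the operator's domain...
            if not hostname.endswith(suffixes):
                return False
            # ...and must resolve back to the connecting IP address.
            return ip in forward(hostname)
        except OSError:  # covers socket.herror and socket.gaierror
            return False
    return False  # the user agent does not claim to be a known crawler
```

The resolver functions are passed in as parameters so the check can be exercised without network access, and so results can be cached in production rather than looked up on every request.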

Knowing the difference between the bots visiting your site lets you take action on bad bots and allow access to good bots.

Here’s an overview of what you need to do before you can start protecting yourself from malicious bots.

Understand your vulnerabilities

Data is collected through every interaction and transaction online. Every business with a web presence is collecting sensitive data that might be of value to bad actors.

Businesses must continually evaluate and evolve their security measures to stay ahead of hackers. It’s crucial to understand the nature of the threat and to have a clear plan of action for patching and protecting online vulnerabilities.

Tell the difference between bot protection myths and facts

To make informed decisions about your business’s security, you need the right information.

For example, you may have heard that all bots are bad. That’s not the case — there are plenty of bots that serve perfectly legitimate, even helpful functions.

It’s also often assumed that all bot attacks involve hacking. In fact, many bot attacks are simply probing for vulnerabilities that a hacker can exploit later.

Detect, categorize and control

Detecting bot traffic is the first step. Once bot traffic has been identified, the next step is to categorize the type of traffic. If it’s known bot traffic – like that of search engine bots – it should be allowed to pass. But known malicious bots, or bots of unknown intent, shouldn’t be allowed to pass.

Finally, the malicious bot traffic must be controlled. The type of bot mitigation required will depend on the type of attack. For a denial of service attack, your security software should simply divert the traffic. If the bot is looking for vulnerabilities or trying to commit fraud like shopping cart stuffing, the software should both deny access and return a false “page not found” 404 to the bot, to stave off future attacks from the same source.
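The detect, categorize, and control flow described above can be sketched as a small decision function. The enum names, the `choose_action` function, and the 403 status for plain denials are assumptions made for illustration; the detection and categorization steps that would supply the inputs are out of scope here.

```python
from enum import Enum


class Category(Enum):
    HUMAN = "human"
    KNOWN_GOOD_BOT = "known good bot"        # e.g. a search engine crawler
    MALICIOUS_BOT = "known malicious bot"
    UNKNOWN_BOT = "bot of unknown intent"


class Attack(Enum):
    NONE = "none"
    DENIAL_OF_SERVICE = "denial of service"
    VULNERABILITY_PROBE = "vulnerability probe"
    FRAUD = "fraud"                          # e.g. shopping cart stuffing


class Action(Enum):
    ALLOW = "allow"
    DIVERT = "divert traffic"
    DENY_WITH_404 = "deny and answer 404"
    DENY = "deny"


# Status codes for denied requests; 403 for a plain denial is an assumption.
DENIAL_STATUS = {Action.DENY_WITH_404: 404, Action.DENY: 403}


def choose_action(category: Category, attack: Attack = Attack.NONE) -> Action:
    """Map a categorized visitor to a mitigation, following the text above."""
    if category in (Category.HUMAN, Category.KNOWN_GOOD_BOT):
        return Action.ALLOW
    if attack is Attack.DENIAL_OF_SERVICE:
        return Action.DIVERT
    if attack in (Attack.VULNERABILITY_PROBE, Attack.FRAUD):
        # A false "page not found" hides that the resource exists.
        return Action.DENY_WITH_404
    # Malicious or unknown bots with no identified attack still do not pass.
    return Action.DENY
```

For example, `choose_action(Category.MALICIOUS_BOT, Attack.FRAUD)` yields `Action.DENY_WITH_404`, while a bot of unknown intent with no identified attack type is simply denied.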

Conclusion

Bad bots are an increasing threat to enterprises worldwide. They’re often difficult to detect, and the damage they do can cripple a business. If you have any questions about how we can help you protect your website and business from bad bots, contact us today for help with your performance and security needs.