Sign up to our Newsletter

Developing software is hard. Even the largest, most successful companies can run into issues when developing new applications – first you have to develop dozens of libraries, packages and other software components, and then you have to make sure your software stacks are up to date, that they’re running smoothly, that they can be scaled according to business needs and so on.

How One AI-Driven Media Platform Cut EBS Costs for AWS ASGs by 48%

For many years now, the leading way to isolate and organize applications and their dependencies has been to place each application in its own virtual machine. Virtual machines make it possible to run multiple applications on the same physical hardware while keeping conflicts among software components and competition for hardware resources to a minimum.

But virtual machines are bulky—typically gigabytes in size. They don’t really solve problems like portability, software updates, or continuous integration and continuous delivery.

To resolve these issues, organizations have adopted Docker containers. Containers make it possible to isolate applications into small, lightweight execution environments that share the operating system kernel. Typically measured in megabytes, containers use far fewer resources than virtual machines and start up almost immediately. They can be packed far more densely on the same hardware and spun up and down en masse with far less effort and overhead.

Why should you use containers?

The old way to deploy applications was to install the applications on a host using the operating-system package manager. This had the disadvantage of entangling the applications’ executables, configuration, libraries, and lifecycles with each other and with the host OS. One could build immutable virtual-machine images in order to achieve predictable rollouts and rollbacks, but VMs are heavyweight and non-portable.

The new way is to deploy containers based on operating-system-level virtualization rather than hardware virtualization. These containers are isolated from each other and from the host: they have their own filesystems, they can’t see each others’ processes, and their computational resource usage can be bounded. They are easier to build than VMs, and because they are decoupled from the underlying infrastructure and from the host filesystem, they are portable across clouds and OS distributions.

Because containers are small and fast, one application can be packed in each container image. This one-to-one application-to-image relationship unlocks the full benefits of containers. With containers, immutable container images can be created at build/release time rather than deployment time, since each application doesn’t need to be composed with the rest of the application stack, nor married to the production infrastructure environment. Generating container images at build/release time enables a consistent environment to be carried from development into production.

Similarly, containers are vastly more transparent than VMs, which facilitates monitoring and management. This is especially true when the containers’ process lifecycles are managed by the infrastructure rather than hidden by a process supervisor inside the container. Finally, with a single application per container, managing the containers becomes tantamount to managing deployment of the application.

Benefits you get by using containers

Here is a summary of container benefits:

- Agile application creation and deployment: Increased ease and efficiency of container image creation compared to VM image use.

- Continuous development, integration, and deployment: Provides for reliable and frequent container image build and deployment with quick and easy rollbacks (due to image immutability).

- Dev and Ops separation of concerns: Create application container images at build/release time rather than deployment time, thereby decoupling applications from infrastructure.

- Observability Not only surfaces OS-level information and metrics, but also application health and other signals.

- Environmental consistency across development, testing, and production: Runs the same on a laptop as it does in the cloud.

- Cloud and OS distribution portability: Runs on Ubuntu, RHEL, CoreOS, on-prem, Google Kubernetes Engine, and anywhere else.

- Application-centric management: Raises the level of abstraction from running an OS on virtual hardware to running an application on an OS using logical resources.

- Loosely coupled, distributed, elastic, liberated micro-services: Applications are broken into smaller, independent pieces and can be deployed and managed dynamically – not a monolithic stack running on one big single-purpose machine.

- Resource isolation: Predictable application performance.

- Resource utilization: High efficiency and density

What is Kubernetes?

Kubernetes (commonly stylized as K8s) is an open-source container-orchestration system for automating deployment, scaling and management of containerized applications. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation. It aims to provide a “platform for automating deployment, scaling, and operations of application containers across clusters of hosts.

Kubernetes, at its basic level, is a system for running and coordinating containerized applications across a cluster of machines. It is a platform designed to completely manage the life cycle of containerized applications and services using methods that provide predictability, scalability, and high availability.

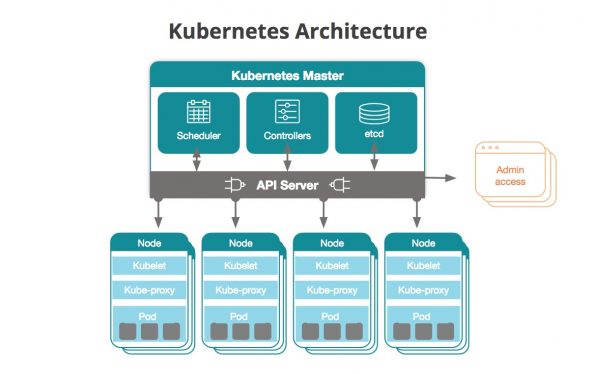

The central component of Kubernetes is the cluster. A cluster is made up of many virtual or physical machines that each serve a specialized function either as a master or as a node. Each node hosts groups of one or more containers (which contain your applications), and the master communicates with nodes about when to create or destroy containers. At the same time, it tells nodes how to re-route traffic based on new container alignments.

The following diagram depicts a general outline of a Kubernetes cluster:

As a Kubernetes user, you can define how your applications should run and the ways they should be able to interact with other applications or the outside world. You can scale your services up or down, perform graceful rolling updates, and switch traffic between different versions of your applications to test features or rollback problematic deployments. Kubernetes provides interfaces and composable platform primitives that allow you to define and manage your applications with high degrees of flexibility, power, and reliability.

Conclusion

Kubernetes is an open-source container-orchestration system for automating deployment, scaling and management of containerized applications. It gives you complete control over container orchestration, enabling you to deploy, maintain and scale application containers across a cluster of hosts. If you have any questions, contact us today to help you out with your performance and security needs.